Contrary Research Rundown #140

Tesla, Waymo, and the great sensor debate, plus new memos on Osmo, ThoughtSpot, Workato, and more

Research Rundown

A few weeks ago, we unpacked the history and potential future for autonomous vehicles in our deep dive “The Trillion-Dollar Battle To Build a Robotaxi Empire.” Now, the battle to become the leading robotaxi company is heating up, and it looks like Tesla’s long-awaited robotaxi launch in Austin is imminent.

The launch was originally scheduled for June 12, 2025, but Elon Musk this week announced a delay to June 22 and noted that even this new date could shift because Tesla is “being super paranoid about safety.”

Elon is right to be concerned about safety. Waymo has a stellar safety record so far, but it uses a suite of sensors across cameras, lidar, and radar, similar to almost all other major robotaxi companies. Tesla, on the other hand, has been an industry outlier by relying on cameras alone, with Elon arguing that lidar and radar are too expensive, not as scalable, and not necessary for safety.

With Tesla’s robotaxi launch approaching, the stakes have never been higher, and the industry’s long-standing sensor debate is about to face its most significant test yet.

The AV Sensor Stack

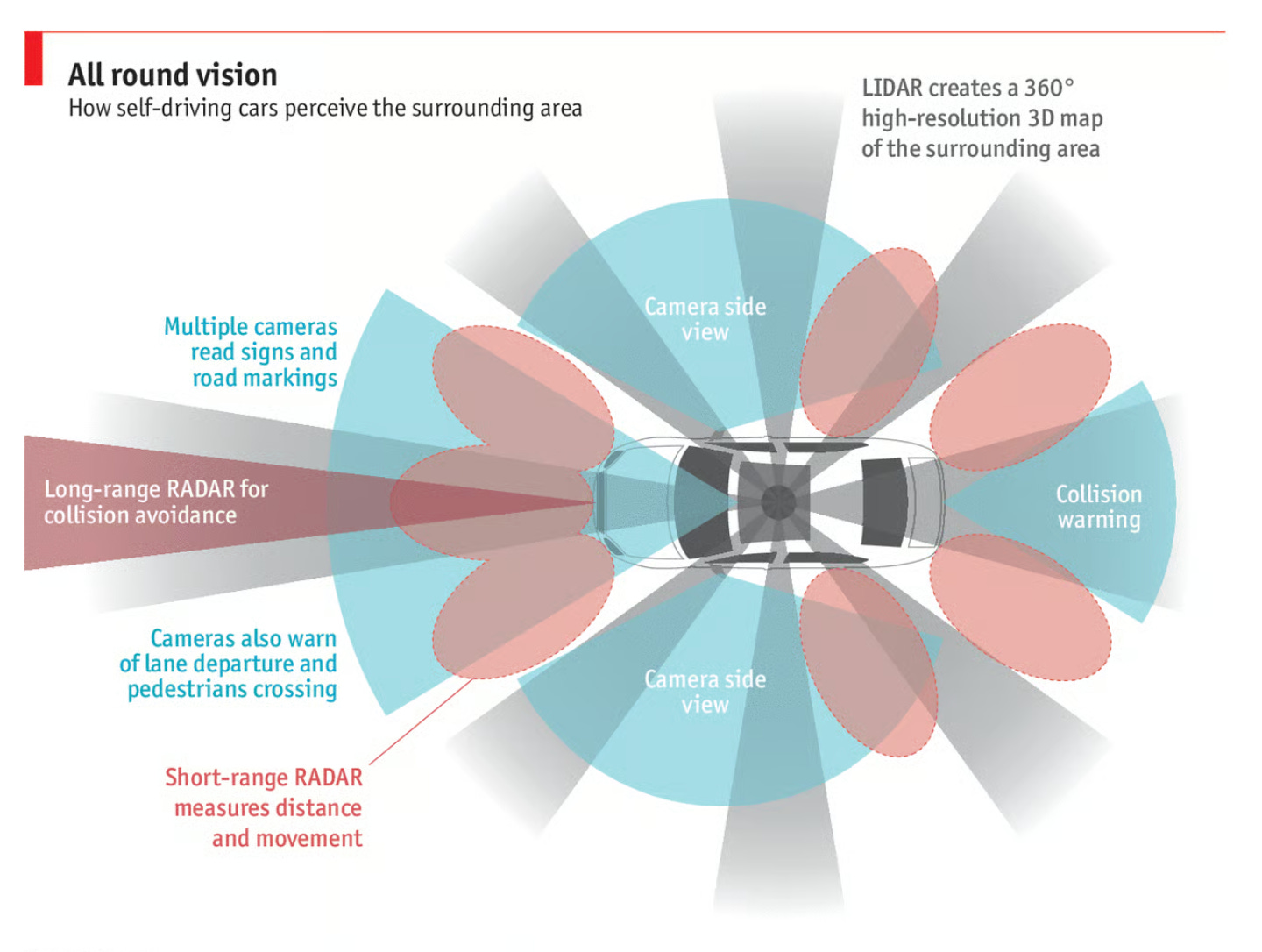

Sensors are one of the most crucial technologies required to operate a self-driving car. Almost all leading robotaxi companies today, from Waymo to Baidu, Zoox, and Pony.ai, use a combination of sensors to obtain a comprehensive view of the surrounding world, maximize the available data for informed decisions, and ensure redundancy in case some sensors are not functioning or are blocked. These sensors include:

Cameras (for object detection and classification)

Radar (for reliable distance and speed measurements)

Lidar (for high-precision 3D mapping and depth perception)

Ultrasonic sensors (for close-range detection in parking and low-speed maneuvering)

Microphones and audio sensors (such as Waymo's External Audio Receivers or EARs, which detect emergency vehicle sirens and honking)

Tesla’s Contrarian Bet on Cameras

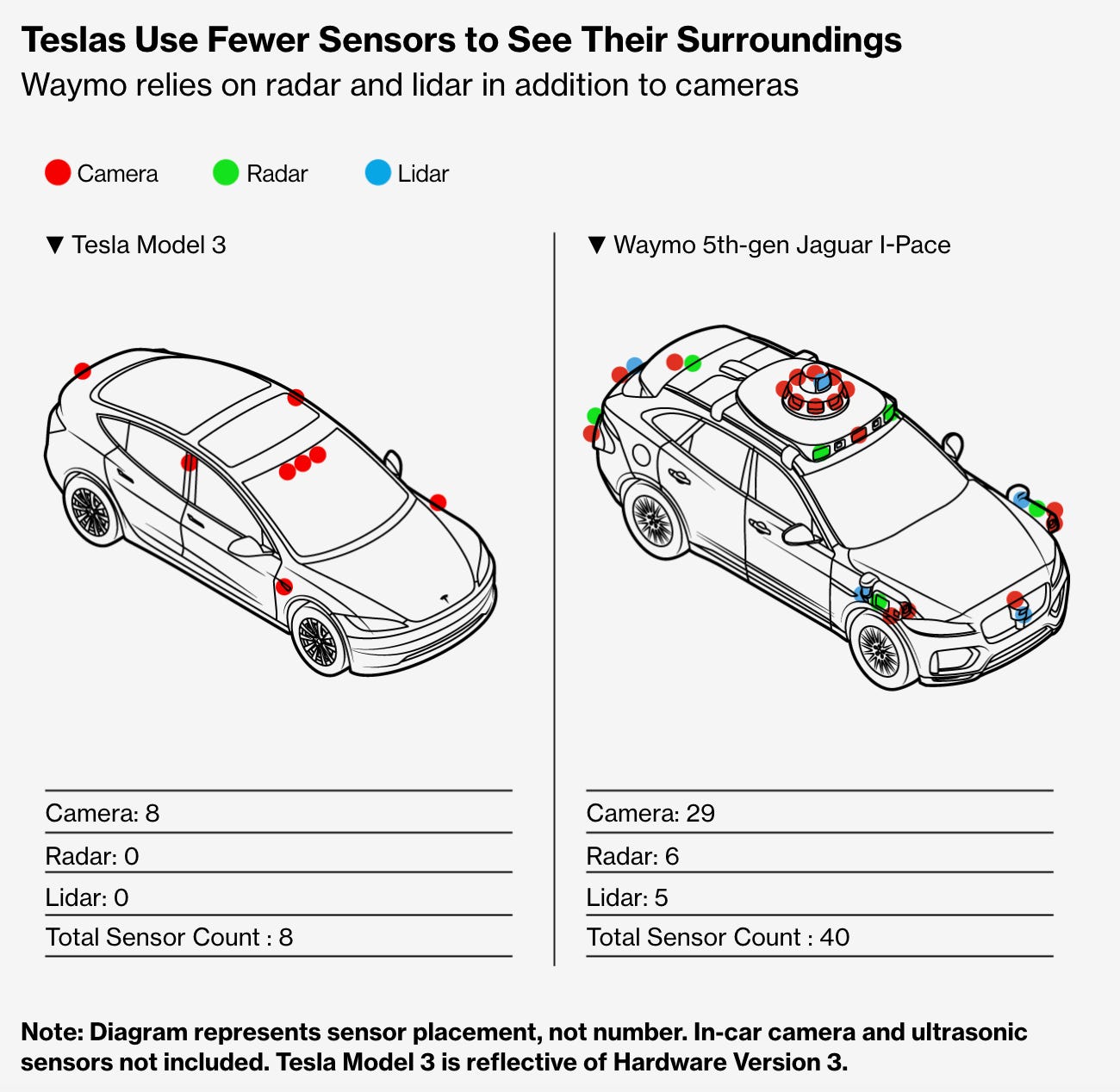

Tesla has taken a fundamentally different approach. It does not use lidar or radar and instead relies entirely on eight cameras to make driving decisions. In contrast, Waymo’s fifth-generation car has 29 cameras, six radar sensors, and five lidar sensors.

Why such a dramatic difference? As early as 2013, Elon expressed skepticism about the need for lidar in autonomous vehicles. Elon framed the reason in a rather intuitive way in 2021: if humans can rely on their eyes and brain, then self-driving cars can rely on cameras and AI.

“Humans drive with eyes & biological neural nets, so makes sense that cameras and silicon neural nets are only way to achieve generalized solution to self-driving.”

Elon remains skeptical of lidar today. When pressed on an earnings call in early 2025 about whether he still sees lidar as a “crutch,” he reiterated his all-camera approach by saying, “Obviously, humans drive without shooting lasers out of their eyes,” a reference to the mechanism by which lidar maps the surrounding area.

In addition to being seen as unnecessary, another reason Tesla has avoided using lidar is the cost. One 2024 report estimated Tesla’s sensor suite costs just $400 per vehicle, compared to an estimated $12.7K per vehicle for Waymo’s sensors on its fifth-generation Jaguar SUVs. This is not the only reason Waymo’s vehicles are estimated to cost significantly more than Tesla’s, but it’s an important consideration, especially when Tesla is targeting a $30K all-in cost for its fully autonomous Cybercab.
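To put those estimates side by side, here is a minimal back-of-the-envelope sketch using only the figures quoted above. The variable and function names are our own, and comparing Waymo's sensor estimate against Tesla's $30K Cybercab target is purely illustrative, since the two numbers come from different vehicles and different sources.

```python
# Figures quoted in the text above (third-party estimates, not audited numbers).
TESLA_SENSOR_COST = 400        # USD per vehicle, per one 2024 report
WAYMO_SENSOR_COST = 12_700     # USD per vehicle, fifth-generation Jaguar estimate
CYBERCAB_TARGET_COST = 30_000  # USD, Tesla's stated all-in target

def share_of_target(sensor_cost, target=CYBERCAB_TARGET_COST):
    """Return sensor cost as a fraction of the target vehicle cost."""
    return sensor_cost / target

# Absolute and relative gap between the two sensor suites.
cost_gap = WAYMO_SENSOR_COST - TESLA_SENSOR_COST
cost_ratio = WAYMO_SENSOR_COST / TESLA_SENSOR_COST

print(f"Sensor cost gap per vehicle: ${cost_gap:,}")
print(f"Waymo-to-Tesla sensor cost ratio: {cost_ratio:.1f}x")
print(f"Tesla sensors vs. $30K target: {share_of_target(TESLA_SENSOR_COST):.1%}")
print(f"Waymo sensors vs. $30K target: {share_of_target(WAYMO_SENSOR_COST):.1%}")
```

On these estimates, a Waymo-style sensor suite alone would take up roughly two-fifths of a $30K vehicle budget, while Tesla's cameras take up a little over 1%.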

Beyond sensor costs, Tesla's camera-only approach delivers another advantage: it eliminates the need for the expensive high-definition mapping that competitors rely on. Companies like Waymo follow a multi-step process where they first deploy vehicles with safety drivers to record and map the area, which can take months for each new city and requires continuous updates. Waymo and companies like it then use these predefined maps to complement their real-time sensor data from lidar/radar about the surrounding area. Tesla, by contrast, claims its software can operate anywhere without pre-mapped data, relying entirely on real-time camera input to understand road conditions.

Are Cameras Alone Safe Enough?

Tesla’s approach may be cheaper and easier to scale, but other robotaxi companies (like Waymo) have long had doubts that a camera-only approach can deliver the safety levels needed to successfully deploy autonomous vehicles at scale.

At Google I/O in May 2025, Waymo showed a few examples where its full suite of sensors successfully avoided pedestrians and where it claims a camera-only approach would have struggled.

In one example, Waymo's lidar picked up the presence of a pedestrian in a Phoenix dust storm that was not visible on the camera.

In another example, Waymo’s sensors were able to detect a pedestrian who was behind a bus and avoid a collision:

“We are detecting a pedestrian on the other side of the bus. That would be completely occluded to a human driver. So what's happening here is that our sensors are able to pick up the movement of the person's feet under the bus. And just that little bit of noisy and sparse signal is enough for the Waymo Driver to detect that there's a pedestrian there and, furthermore, to predict what they're going to do in the future, allowing us to take a defensive action early.”

Beyond these anecdotes, Waymo has the safety record and data to support its claim that its sensor suite enhances safety. Waymo has been involved in only one fatal accident in its history, and it was not caused by a Waymo error: in January 2025, a Tesla struck an unoccupied Waymo and other cars at a red light, killing one person. As we wrote in our last piece, one study by Swiss Re shows Waymo saw an 88% reduction in property damage claims and a 92% reduction in bodily injury claims when compared to human-driven vehicles.

Tesla does not have comparable data yet, but there have been incidents in recent years where some have claimed its lack of additional sensors has contributed to accidents. For example, in 2023, a Tesla in Full Self-Driving (FSD) mode hit a 71-year-old woman at highway speed, killing her. Video of the crash shows sun glare appearing to blind the camera, and the National Highway Traffic Safety Administration (NHTSA) opened an investigation into Tesla in October 2024 over four FSD collisions that occurred in low-visibility situations.

The Changing Economics of Lidar

Another tailwind for Waymo’s approach has been the falling cost of lidar. When Elon first called lidar too expensive in the early 2010s, it cost ~$75K per unit. Since then, costs have fallen dramatically, and some lidar units sold for personal vehicles (not robotaxis) are being priced in the hundreds of dollars. Chinese lidar maker Hesai is pricing its units around $200 each, and US-based Luminar Technologies is pricing its devices at around $500 each.

Another example is Mobileye, which develops self-driving software and hardware for personal vehicles and robotaxis. In late 2024, it stopped production of its custom lidar technology in part due to the “continued better-than-expected cost reductions in third-party” lidar units.

By one estimate, lidar costs have fallen by 99% since 2014. Further reductions in these costs would help narrow the current large gap between Waymo’s and Tesla’s sensor suite costs and call into question Elon’s argument that lidar is too costly.
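As a rough sanity check on that figure, the sketch below computes the implied decline from the ~$75K early-2010s price to the Hesai and Luminar prices cited above. The names are our own, and the comparison is not like-for-like: the cheaper units are sold for personal vehicles rather than robotaxis, and the 99% estimate may use a different baseline.

```python
# Per-unit prices cited in the text above (approximate).
EARLY_LIDAR_COST = 75_000  # USD, early 2010s
CURRENT_PRICES = {"Hesai": 200, "Luminar": 500}  # USD, personal-vehicle units

def percent_decline(old_price, new_price):
    """Return the percentage drop from old_price to new_price."""
    return (old_price - new_price) / old_price * 100

# Implied decline for each vendor relative to the early-2010s price.
declines = {
    vendor: percent_decline(EARLY_LIDAR_COST, price)
    for vendor, price in CURRENT_PRICES.items()
}

for vendor, decline in declines.items():
    print(f"{vendor}: {decline:.1f}% below the early-2010s price")
```

Both implied declines come out above 99%, which is in line with the estimate, with the caveats noted above.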

Austin: Putting Tesla To The Test

There are plenty of other problems both Waymo and Tesla need to solve to successfully deploy self-driving cars (operations, vehicle building, regulations, fleet management, etc.). But Tesla’s first goal in its robotaxi pilot in Austin will be to show it can achieve comparable levels of safety to Waymo’s approach without the need for the same sensor set and extensive mapping.

If it can, Tesla is in a uniquely advantageous position to scale, given its vertically integrated approach and ability to operate in any area without pre-mapping. If it struggles, it will raise questions about whether its camera-only strategy is the right one long term.

Osmo is an olfactory intelligence company developing the first scalable system for reading, mapping and reproducing scent, with the company claiming the first successful digital capture and re-synthesis of a naturally occurring smell. To learn more, read our full memo here and check out some open roles below:

Fragrance or Flavor Compounder - Elizabeth, NJ

Regulatory Specialist, Fragrance Manufacturing - Elizabeth, NJ

ThoughtSpot offers a platform designed to address specific limitations of traditional business intelligence tools. ThoughtSpot’s platform allows users to query data using natural language and integrates with modern cloud data stacks. To learn more, read our full memo here and check out some open roles below:

Solutions Engineer - Mountain View, CA

Workato is positioning itself to meet the rising need for unified automation and integration platforms with its cloud-native, low-code iPaaS that combines consumer-grade usability with enterprise-grade scalability. To learn more, read our full memo here and check out some open roles below:

Senior Software Engineer (Search) - Palo Alto, CA

Senior UX Designer (Builder Experience) - Palo Alto, CA

Check out some standout roles from this week.

Character AI | San Francisco, Palo Alto or New York - Software Engineer (Core Product), Software Engineer (Growth), Software Engineer (Safety), Research Engineer (Machine Learning Infrastructure)

Mercury | San Francisco, New York, Portland, or Remote (US/Canada) - Senior Backend Engineer (Product), Senior Full-Stack Engineer, Senior Software Engineer (Growth Infrastructure)

Anyscale | San Francisco or Palo Alto - Software Engineer (Product Platform), Software Engineer (Release & Engineering Efficiency), Software Engineer (ML Developer Experience)

Meta bets $14.3 billion on Scale AI to reboot its AI strategy. The 49% non-voting stake gives Meta access to Scale’s data-labeling business and installs Scale CEO Alexandr Wang to lead a new “Superintelligence” lab under Mark Zuckerberg at Meta.

Glean raises at a $7.2 billion valuation with $150 million Series F. The workplace AI startup tripled its valuation in just 16 months and topped $100 million ARR, highlighting surging demand for LLM-powered search and agent tools in the enterprise stack.

Sam Altman declares AI takeoff, predicts superintelligence soon. In a new post, OpenAI CEO Sam Altman says the “singularity has started,” with 2025 marking the rise of cognitive agents and 2026-2027 expected to deliver novel scientific insights and humanoid robots.

Chime IPOs, surges 37% in its Nasdaq debut. The digital bank finished its first trading day at $37 per share, a rise of 37%. This equates to a $13.5 billion market cap, a decline from the $25 billion valuation it had in its last private fundraise in 2021.

Meta AI’s public feed sparks privacy alarm. Business Insider calls the app’s “Discover” stream the internet’s saddest place after finding users unintentionally broadcasting intimate legal, medical, and grief-related chats.

OpenAI unveils o3-pro, its most capable reasoning model yet. The model outperforms Google’s Gemini 2.5 Pro and Anthropic’s Claude Opus 4 on math and science benchmarks while adding web, vision, and file-analysis tools.

Indian investigators probe Air India 787 crash; one survivor. The AAIB, aided by the U.S. NTSB and U.K. experts, recovered the black box after Flight 171 slammed into a six-story building seconds after takeoff from Ahmedabad, killing 241 people.

Anduril’s ex-engineering chief lifts the curtain on its rapid-fire defense factory. In a Colossus Review essay, former SVP Adnan Esmail recounts how the startup scaled by sprint-prototyping off-the-shelf hardware, matrixing 550 “badass” engineers across 30 product lines, and enforcing ruthless talent density.

Jensen Huang criticized Anthropic CEO Dario Amodei’s AI doomsday warnings. Huang said Amodei claims AI is so scary, so expensive, and so powerful that only Anthropic should be building it, which Huang argues stifles open, transparent innovation.

Runway pitches AI video as Hollywood ally, not threat. In an interview with The Verge, CEO Cris Valenzuela said Runway’s Gen-4 model slashes storyboarding and VFX timelines from weeks to minutes, predicting cheaper, faster workflows will democratize high-quality filmmaking as studios like Lionsgate and AMC test the tech.

Weight-loss drugs bite into McDonald’s prospects. Redburn Atlantic downgraded the fast food giant to “sell,” estimating GLP-1 medications such as Ozempic could wipe out 28 million visits and $482 million in annual sales. One study already shows these drugs dampening food demand.

Misaligned incentives keep ed-tech stuck, argues Replit’s Gian Segato. Because parents or institutions foot the bill while students consume, K-12 products chase compliance over learning; adult apps avoid the needed “pain” of mastery and slide into feel-good edutainment. AI’s mass personalization might square effectiveness with scale, but high costs and big-platform competition still cloud the path to viable business models.

Google Cloud outage ripples across the web. On June 12, a multi-region Google Cloud disruption took 13 services offline, knocking out or degrading Shopify, OpenAI, Twitch, GitHub, Cloudflare, and more. The hours-long incident underscores the internet’s dependence on a handful of hyperscalers and complicates Google’s push to match AWS and Azure on reliability.

At Contrary Research, we’ve built the best starting place to understand private tech companies. We can't do it alone, nor would we want to. We focus on bringing together a variety of different perspectives.

That's why applications are open for our Research Fellowship. In the past, we've worked with software engineers, product managers, investors, and more. If you're interested in researching and writing about tech companies, apply here!