Contrary Research Rundown #141

Making the case for cautious optimism on AI in the classroom, plus new memos on Mux, Krea, and more

Research Rundown

Throughout history, the introduction of new technology has often been quickly followed by concerns about its impact on learning. In Plato’s Phaedrus, written around 370 BC, Socrates laments that writing itself would weaken the necessity and power of memory and let people appear wise without actually understanding things:

“For this invention will produce forgetfulness in the minds of those who learn to use it, because they will not practice their memory… You have invented an elixir not of memory, but of reminding; and you offer your pupils the appearance of wisdom, not true wisdom…”

The same fear has recurred over the centuries, as people worried that the printing press, the calculator, and the internet would undermine their ability to learn and to develop their cognitive faculties.

So far in the 21st century, however, US concerns about education have centered more on outcomes than on disruption.

Math scores in the US peaked in 2009 before declining steadily over the next decade. Reading and mathematics scores for 9- and 13-year-olds climbed through the late 20th century and into the early 2000s, stalled around the early 2010s, and have declined since. Across the board, educational outcomes in the US have dropped dramatically since the school shutdowns of 2020.

One meta-analysis reported that global learning losses during the COVID-19 pandemic were equivalent to 0.11–0.14 standard deviations, or roughly 4–7 months of lost learning in key subjects. The National Assessment of Educational Progress (NAEP) reports that student reading scores fell to their lowest level since it began tracking them in 1992; by those results, “a full third of kids couldn’t show ‘basic’ reading skills expected for their age group.” The learning lost between 2019 and 2022 is equivalent to wiping out decades of educational gains.

Yet despite this steady decline, one clear solution has been understood for decades. Until recently, though, it was available only to a select few.

Bloom’s Problem

In 1984, Benjamin Bloom, an educational psychologist, authored a paper entitled “The 2 Sigma Problem.” Bloom demonstrated that 1:1 tutoring using mastery learning led to a two standard deviation improvement in student performance. Mastery learning means students stay on a concept until they can prove they’ve truly mastered it before moving on. A two standard deviation gain is dramatic: it is the equivalent of moving a student from the 50th percentile to the 98th.

In the paper, Bloom compared conventional education, mastery learning, and tutorial instruction. While mastery learning led to measurable gains, 1:1 tutoring produced dramatically greater improvements.

These gains weren’t limited to the highest-performing students. Bloom’s work demonstrated that 1:1 tutoring improved outcomes for 80% of the students across the study population. The results suggested that almost any student could achieve mastery of a given topic, and that what stood in their way was a lack of access to the right method of instruction rather than an inherent lack of ability.

Such exceptional results raise the question of why Bloom’s findings weren’t implemented universally. Why was Bloom’s paper called “the 2 sigma problem” instead of “the 2 sigma solution”? As Bloom explained in his paper, the goal was not widespread adoption of tutoring but finding “more practical and realistic conditions than the one-to-one tutoring, which is too costly for most societies to bear on a large scale.”

Despite the overwhelming success of individualized education via 1:1 tutoring, the cost and complexity made tutoring too difficult to scale effectively. That is, until AI.

AI’s Solution

Individual tutoring has historically been a tall order. Tutors need context on both the individual’s learning status and the subject material, as well as the ability to organize a lesson into a coherent plan.

Doing that individually for 50 million students seemed like the stuff of science fiction. Neal Stephenson’s 1995 novel, The Diamond Age, for example, portrayed a device called "the Primer," described as "an interactive, pseudo-intelligence-powered, personalized educational device that serves up stories, puzzles, and lessons that grow and evolve with the reader. [The device] transformed its owner, Nell, from the lower-class child of a single mother into the leader of her own phyle, or tribe.” Personalized educational AI was a dream, not something we could have in the real world.

But, increasingly, science fiction is becoming science fact. Today, AI is uniquely suited to exactly the kind of personalized 1:1 tutoring that produced such rapid educational strides in Bloom’s research. It's fast, cheap, widely accessible via smartphones and laptops, and it draws on a vast share of recorded human knowledge.

Though the data is still early, one May 2025 meta-analysis of 51 experiments and quasi-experiments published between November 2022 and February 2025 found access to tools like ChatGPT had a large overall effect on learning performance, a 0.87 standard deviation improvement, and smaller, positive effects on learners’ perceptions and higher-order thinking when ChatGPT was used as part of instruction.

While this is not yet at the level of Bloom’s two standard deviations of improvement, AI in education is just getting started. As AI tutoring products improve and educational programs use them more fully, the evidence base will only grow. But before AI can realize its potential in education, it will first have to pass the educational purity test.
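To make the gap between these two effect sizes concrete, here is a minimal sketch that converts a gain measured in standard deviations into the percentile a median student would reach. It assumes test scores are normally distributed, which is an illustrative assumption rather than something either study states, and the function name `percentile_after_gain` is hypothetical.

```python
from statistics import NormalDist

# Standard normal distribution: assumes scores are normally distributed (illustrative assumption).
STANDARD_NORMAL = NormalDist(mu=0.0, sigma=1.0)


def percentile_after_gain(effect_size_sd: float, start_percentile: float = 50.0) -> float:
    """Hypothetical helper: the percentile a student reaches after improving
    by `effect_size_sd` standard deviations from `start_percentile`."""
    # Convert the starting percentile into a z-score (0.0 for the median student).
    start_z = STANDARD_NORMAL.inv_cdf(start_percentile / 100)
    # Shift by the effect size and convert back into a percentile.
    return 100 * STANDARD_NORMAL.cdf(start_z + effect_size_sd)


for label, effect in [("Bloom, 1:1 tutoring", 2.0), ("ChatGPT meta-analysis", 0.87)]:
    print(f"{label}: +{effect} SD -> percentile {percentile_after_gain(effect):.1f}")
```

Under that assumption, a 2 SD gain moves a median student to roughly the 98th percentile, while a 0.87 SD gain lands near the 81st: a large improvement, but still well short of Bloom’s benchmark.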

Educational Purity Rears Its Head

Staring down the barrel of massive disruption from AI across all facets of people’s lives, we start to hear a resurgence of concerns like those of Socrates. Just as people worried that writing would diminish memory, print would diminish the beauty of manuscripts, calculators would diminish mental math, and the internet would diminish attention spans, people now worry AI will diminish the effectiveness of education. As in each previous era, supporters of the status quo in education are deeply concerned.

One 2024 Pew Research Center survey, for example, found that 25% of public K-12 teachers say using AI tools in K-12 education does more harm than good, while only 6% say it does more good than harm. Critics worry that schools will use AI to replace teachers, that it will lead to rampant cheating, and that it will ultimately hurt student learning.

“AI will replace teachers.”

One instinctive reaction is to see AI as a threat to teachers’ jobs. For some, the concern is that AI might replace teachers without actually being better than them. From a lack of personal empathy to a tendency toward misinformation and bias, the arguments in favor of human educators over AI are extensive.

But the reality is that the teaching workforce is itself a significant bottleneck behind poor educational outcomes. One 2024 RAND study found that 60% of K-12 teachers are experiencing burnout, 59% are frequently stressed, and 25% are considering quitting. As a result, schools have had to turn to alternative sources for educators. In 2023, 37% of teachers were inexperienced, out-of-field, or uncertified.

“AI will let students cheat.”

One of the early waves of AI use in education was essay-writing in colleges. One report found over 7K cases of “AI cheating” in 2024. Attempts to enforce academic integrity through detection software and Trojan horses quickly proved unsuccessful, as students alerted one another on social media. Moving all assessments in person is often unfeasible, leaving professors feeling defeated and expressing sentiments like “My hope is that students are still learning through this process.”

However, the accusation of cheating is more an indictment of the existing educational paradigm than of AI itself. That paradigm already has serious flaws, from an evaluation system rife with implicit bias to inconsistent feedback and grading.

In response to AI-fueled “cheating,” educators have proposed anti-AI honor codes or grading only closed-book, in-class, or oral exams. But those approaches miss the point, as Tyler Cowen explains:

“The trouble with all of these remedies is that they implicitly insist that we must do everything possible to keep wasteful instruction in place. The current system is misleading students about the skills they will need to succeed in the future, and providing all the wrong incentives and rankings of student quality.”

“AI will make students dumber.”

Even if AI can alleviate teacher shortages or shed light on inadequate evaluation systems, concerns remain about AI’s potential negative impact on people’s cognitive abilities. One 2024 study of 1K high school students in Turkey compared their learning experience across three groups: (1) a control group with no access to AI, (2) GPT Base, which had access to a standard ChatGPT interface, and (3) GPT Tutor, which used a chat interface with teacher-supplied solutions and instructions to give only incremental hints, not full answers. At the end of the study sessions, a final closed-book exam was administered.

On practice problems, both GPT Base (+48%) and GPT Tutor (+127%) showed large improvements compared to the control group. But on the closed-book exam, the GPT Base group did worse than the control group (-17%), while the GPT Tutor group performed about the same as the control group.

Another study showed that how students use LLMs affects their understanding. Students who substitute LLMs for some of their learning activities (e.g., generating solutions to exercises) cover more topics but understand each one less. Students who instead complement their learning activities with LLMs (e.g., by asking for explanations) don’t cover more topics but do understand them better. This suggests LLMs can help learners when used as a complement, but disadvantage them when used as a substitute.

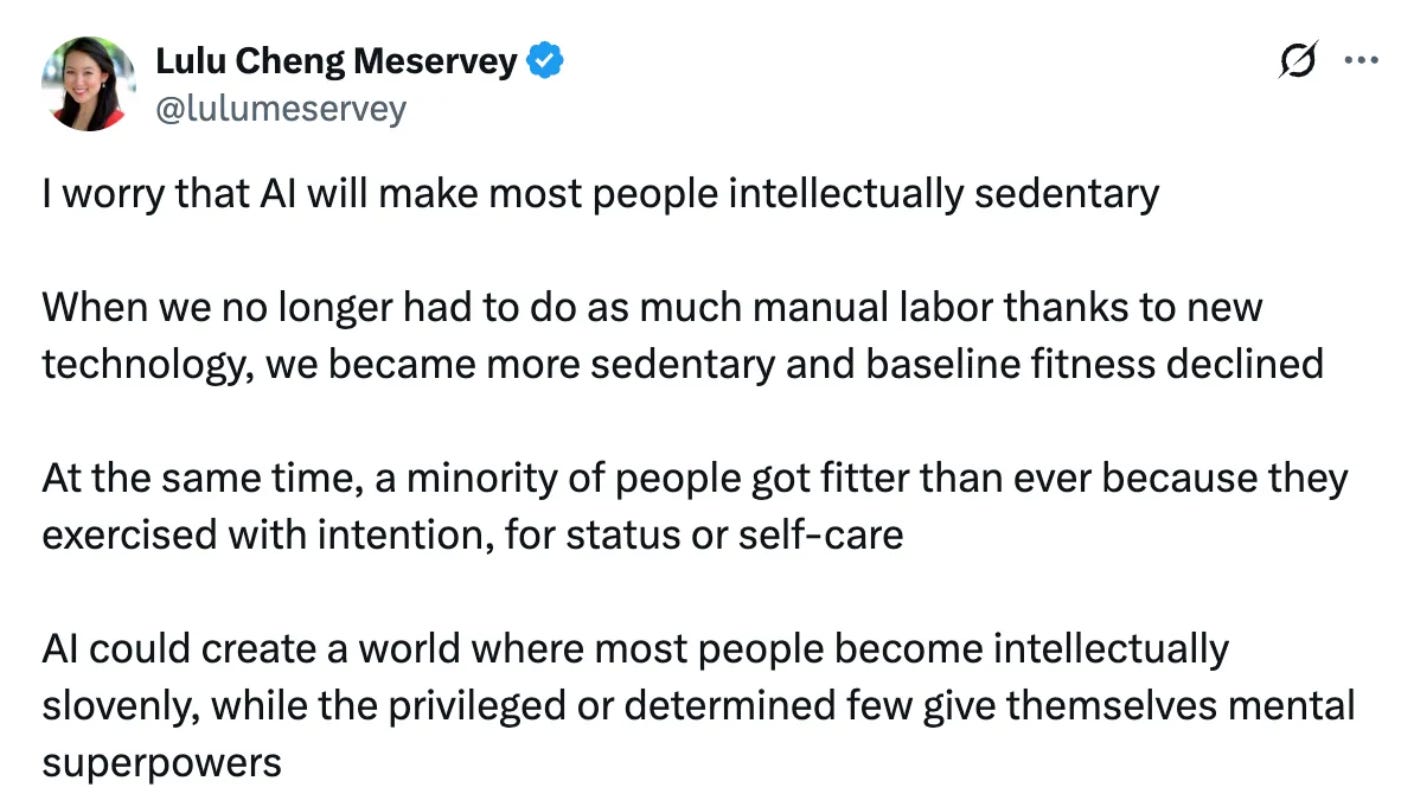

It's easy to imagine a world in which students outsource too much of their thinking to AI, become overly dependent on it, and end up with atrophied reasoning and critical thinking skills. Some worry about AI making people “intellectually sedentary” and harming their mental fitness, in the same way that the decline of manual labor after the industrial revolution made people more sedentary and reduced average physical fitness.

A paper that went viral this week, “Your Brain on ChatGPT: Accumulation of Cognitive Debt when Using an AI Assistant for Essay Writing Task,” concluded, effectively, that AI created cognitively weaker individuals. But while AI can certainly become a crutch, critics pointed out that the study’s many variables meant the only real conclusion was that “having students copy/paste essays from LLMs vs writing the essay themselves will reduce the amount they learn from the assignment.” Others pointed out that the paper didn’t even measure “learning,” but rather the outcome of a “writing sprint.”

There’s no doubt that any tool, from calculators to the internet to AI, could become an intellectual crutch. But these same tools can also remove parts of the learning experience that add little value for the learner. What’s more, the promise of AI-native education isn’t that it replaces human learning, but that it can supercharge it.

The Promise of AI-Native Education

Despite all the concerns raised about AI’s potential impact on learning, the future remains genuinely uncertain. The same Pew survey in which 25% of public K-12 teachers said AI would do more harm than good found that 67% were either unsure about AI’s impact on education or felt the good and bad would balance out. That is a clear majority who see the future as still unwritten.

AI is already being deployed most easily by education programs that emerged from more recent waves of innovation. For example, in March 2023 Khan Academy launched Khanmigo, which draws on the site’s full content library and guides students with Socratic prompts rather than simply supplying answers. While there haven’t been formal studies on the efficacy of this kind of program, the product is being piloted in over 400 US school districts.

Other companies have expanded from broader education technology into AI. MagicSchool AI started with a set of AI tools for teachers that generate lesson plans and rubrics and support other teacher workflows. Launched in 2023, it reports more than 5 million teacher accounts across roughly 12K schools in 160 countries, covering nearly all US districts. In 2025, the company expanded into AI tutoring with MagicSchool for Students. From instructor-informed resource bots to character chatbots of historic figures, research assistants for projects, and study partner bots, MagicSchool is attempting to layer AI into every stage of the learning journey.

Kira Learning, chaired by Andrew Ng, co-founder of the Google Brain team, has followed a similar trajectory. It launched in 2021 as a teacher support platform, helping teachers create lessons and auto-grade work, and grew to hundreds of districts nationally. In April 2025, it also rolled out an AI tutor that offers real-time, 1:1 guidance to students directly. According to one report, “A.I. agents adapt to each student’s pace and mastery level, while grading is automated with instant feedback—giving educators time to focus on teaching.”

From Mathpresso’s MathGPT to SocratiQ, the adaptive STEM framework for Socratic learning, to Synthesis’ superhuman AI math tutor, efforts to bring AI into education are extensive.

But some of the best results have come from schools that have rethought the structure of education entirely. Alpha School is one example. Founded by MacKenzie Price in 2014, it has students spend two hours a day in AI tutor-led classes. Human teachers are rebranded as guides, offering mentorship and acting as a sounding board for student projects, and students spend the rest of the day on project-based learning. Like the original Bloom study, Alpha School follows a mastery-learning approach: students go at their own pace and can’t progress to the next step until they pass a mastery test.

Alpha School claims its students are usually in the top 1-2% for their grade. In addition, Alpha School claims its students improve 2.6x faster than their peers on nationally normed MAP tests, which measure student progress throughout the year compared to where they began.

Again, every effort to measure AI’s impact in education is early. Some critics argue that Alpha School’s students may score highly not because of AI and the mastery model, but because the school selects the very best students. Every program that seeks to demonstrate the value of AI will need to be rigorously measured and scrutinized to ensure high-quality outcomes. But that’s good! That’s what you want from innovative education: measurable outcomes that can be replicated elsewhere. If we’re already seeing signs of success this early, that’s one reason to be cautiously optimistic.

The Case For Cautious Optimism

The hunger for higher-quality education is apparent. Declining outcomes and a seemingly unfixable education system leave people eager for solutions. We’ve seen this kind of hunger before. In the early 2010s, massive open online courses (MOOCs) were all the rage. In 2012, Sal Khan, the founder of Khan Academy, predicted that “classrooms would some day be comprised ‘20 to 30 students working on all different things,’ moving at their own pace, with a teacher who simply ‘administers the chaos.’”

From MOOCs to in-classroom laptops, technology in education has always come with lofty promises and limited outcomes. But the near-perfect fit between LLMs and personalized 1:1 tutoring is too good to ignore. It's true that AI won’t automatically solve Bloom’s 2 Sigma Problem. If students expect AI to do their thinking for them, it will help no more than watching a dozen Khan Academy videos and expecting to have learned the material.

But as an assistant, rather than a crutch, AI has a better shot at improving student learning than any education technology in recent history.

Mux is a B2B video infrastructure platform that offers live and on-demand video hosting, storage, delivery, and analytics. To learn more, read our full memo here and check out some open roles below:

Video Software Engineer (Mid/Senior Level) - San Francisco, CA

Software Engineer (Distributed Systems Mid/Senior Level) - San Francisco, CA

Krea aims to be a next-generation creative copilot for artists, designers, and marketers, combining real-time image and video generation with intuitive, high-control workflows. To learn more, read our full memo here and check out some open roles below:

AI/ML Inference Engineer - San Francisco, CA

Front-end Engineer - San Francisco, CA

Check out some standout roles from this week.

Stripe | Seattle, WA • San Francisco, CA • Remote (US) - Backend Engineer (Developer Experience & Product Platform), Full Stack Engineer (Payments and Risk), Engineering Manager (Frontend Infrastructure)

Figma | San Francisco, CA • New York, NY - Software Engineer (Platform Engineering), Data Engineer, Product Manager (Data and AI Infrastructure), Software Engineer (Production Engineering)

Ramp | New York, NY • Miami, FL • San Francisco, CA • Remote (US) - Senior Software Engineer (Backend), Senior Software Engineer (Frontend), Software Engineer (Forward Deployed)

The Information reports Meta is in talks to hire Nat Friedman and Daniel Gross, weighing a buyout of their AI fund. If the talks are successful, Gross would leave Safe Superintelligence, which he co-founded with former OpenAI chief scientist Ilya Sutskever in 2024, to work “mostly on AI products” at Meta. Friedman’s remit is “expected to be broader.” This news comes as OpenAI CEO Sam Altman claims Meta has been offering “$100 million signing bonuses” to poach top AI talent.

xAI’s cash burn exceeds $1 billion a month. According to Bloomberg, xAI expects to burn $13 billion in 2025 and is lining up $4.3 billion in equity and $5 billion in debt to fund compute and data-center costs. Elon Musk responded that “Bloomberg is talking nonsense,” but did not elaborate.

Amazon CEO says AI will shrink its corporate headcount. In a memo, Andy Jassy told employees that generative-AI “agents” will let Amazon “get more done with scrappier teams,” leading to fewer corporate roles over the next few years. The memo comes after Amazon has laid off more than 27K employees since 2022, and follows similar statements about AI-driven headcount efficiency from other companies, such as JPMorgan Chase.

OpenAI and Microsoft tensions are reaching a “boiling point”. OpenAI executives have considered accusing Microsoft of anticompetitive behavior and requesting a federal review as the two renegotiate Microsoft’s future stake and revenue share. OpenAI and Microsoft are also at a standoff over OpenAI’s $3 billion acquisition of Windsurf, because OpenAI doesn’t want Microsoft to have access to Windsurf’s intellectual property.

Applied Intuition has raised $250 million at a $6 billion valuation to scale defense and autonomy software. The Series F round, backed by Lux, a16z, and Elad Gil, boosts the company’s capital as it expands simulation and AI tools for both commercial autonomy and US defense programs.

OpenAI secured a $200 million Pentagon contract to develop military AI tools. The deal with the Department of Defense’s Chief Digital and Artificial Intelligence Office will fund large-scale AI models for defense and government use, marking OpenAI’s formal entry into the federal sector with a new “OpenAI for Government” initiative.

Surge AI overtakes rival Scale AI with $1 billion in sales and zero VC backing. According to The Information, Surge AI recorded more revenue than Scale AI in 2024 and was profitable, despite being bootstrapped by founder Edwin Chen. This comes amidst reports that Scale AI clients, like OpenAI and Google, are winding down work with the company after its $14.3 billion investment from Meta last week.

Coatue released a 102-page “State of the Markets” deck for its annual East Meets West gathering. It argues that the economy is in a long-term AI supercycle, that a self-reinforcing “AI Flywheel” will amplify productivity and lower the US’s debt-to-GDP ratio, and that US exceptionalism will continue.

In an interesting essay, Alex Komoroske argues against OpenAI’s vision for a centralized AI. Komoroske says Sam Altman’s “gentle singularity” would hand one company control over user memories and behavior, and instead urges “Private Intelligences” built on open, composable ecosystems where individuals own and port their data.

Andrej Karpathy’s “Software 3.0” talk says English is the hottest new programming language. As a follow-up to his viral “Software 2.0” blog post in 2017, Karpathy says large language models will serve as code-generation fabs, shifting developers from hand-writing functions to supervising “jagged-intelligence” systems, and ushering in a broad rewrite of today’s software stack while keeping humans in the verification loop.

A leaked GitHub repository shows President Trump’s team is prepping an “AI.gov” launch for July 4. The now-deleted GSA repository outlined a whole-of-government platform with three parts: an AI chatbot, an API platform to integrate external models, and a “CONSOLE” dashboard to audit adoption.

An International Energy Agency (IEA) chart shows EVs already cutting China’s oil demand by one million barrels per day. Electric vehicles are displacing about one million barrels of oil per day in China, with the International Energy Agency projecting the figure could triple to roughly three million by 2030.

At Contrary Research, we’ve built the best starting place to understand private tech companies. We can't do it alone, nor would we want to. We focus on bringing together a variety of different perspectives.

That's why applications are open for our Research Fellowship. In the past, we've worked with software engineers, product managers, investors, and more. If you're interested in researching and writing about tech companies, apply here!